Distributed File System Replication (DFSr) was originally conceived for synchronising SYSVOL data on domain controllers in Active Directory estates, and it is still used for that today.

There are many ways of tuning DFSr. In this article I will go through the settings and checks that get the best performance and reliability from your DFSr configuration.

Recommended Settings for DFSr:

File Server Specs (all based on VMs)

- 4-core CPU

- 8-16 GB memory

- Data volumes with enough space to hold the replicated data as well as the staging data

- Gigabit Ethernet; if multiple network cards are available, NIC teaming is also recommended

- Consider placing only one replicated folder per drive. DFSr keeps one database per volume (in the System Volume Information folder), so if you face an issue such as a heavy backlog or DFSr database corruption and need to clear out that database, every replicated folder on the volume is affected. If two replicated folders are on the same drive and only one of them has the issue, the database is still wiped out for both, and both folders have to go through initial replication again, which can take a considerable amount of time if the data set is large.

- If you are creating a replication group for existing data, or adding a new member to an existing replication group, and the data set is large (a few hundred GB or several TB), the initial sync can take a substantial amount of time to complete because of the work involved in the initial sync process.

- (Optional) Preseed the data: copy it, along with its NTFS security, from the source server to the destination server in advance using Robocopy. This saves the time needed to replicate the data over the wire. However, initial sync still takes time, because DFSr reads every preseeded file, generates a hash of it and stores that hash in its database. This processing is local to each DFSr member; to save this time as well, use DFSr database cloning (available from Windows Server 2012 R2).

- The initial sync must complete before data can be replicated back and forth, and it can take many days depending on the size of the data being synced. To save time, preseed the data and then clone the DFSr database.
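To check a member against the one-replicated-folder-per-drive advice above, the grouping can be sketched in Python. This is a minimal sketch, assuming you already have the list of replicated folder paths (for example, copied from DFS Management) and that each path starts with a drive letter; the function name `volumes_with_multiple_folders` and the example paths are hypothetical, and volumes mounted into folders are not detected.

```python
import ntpath
from collections import defaultdict


def volumes_with_multiple_folders(replicated_folders):
    """Group replicated folder paths by drive letter and return only the
    volumes that host more than one replicated folder.

    Assumption: every path starts with a drive letter (e.g. D:\\...);
    volumes mounted into folders are not detected.
    """
    by_volume = defaultdict(list)
    for path in replicated_folders:
        drive, _ = ntpath.splitdrive(path)  # "D:\\Shares\\HR" -> "D:"
        by_volume[drive.upper()].append(path)
    return {vol: paths for vol, paths in by_volume.items() if len(paths) > 1}


if __name__ == "__main__":
    # Hypothetical replicated folder paths for illustration.
    folders = [r"D:\Shares\Finance", r"D:\Shares\HR", r"E:\Shares\Projects"]
    print(volumes_with_multiple_folders(folders))
```

Any volume in the result is one where clearing the DFSr database would force initial replication for more than one folder.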

Staging

- For the initial copy of the file share, it is recommended to set the staging folder quota as large as possible, i.e. if the replicated folder is 50 GB in size, set the staging quota to 50 GB.

- The staging folder should be (ideally) on a different spindle to prevent disk contention

- If the staging folder cannot be set to the same size, set it to at least the combined size of the largest files in the folder; Microsoft's guidance for Windows Server 2008 and later is the 32 largest files (run TreeSize on the original data store to find them).

- Monitor the DFS Replication event log for staging clean-up events, and increase the staging quota if they occur frequently (see the event pairs in the next section).
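The staging sizing rule can be sketched in Python as a stand-in for TreeSize: walk the replicated folder, take the largest files and add them up. This is a minimal sketch, assuming you can read the folder from where the script runs; `file_sizes`, `sum_of_largest` and `staging_quota_mb` are hypothetical helpers, `n_largest` is whatever file count your sizing rule uses (Microsoft's guidance for Windows Server 2008 and later is 32), and skipping `DfsrPrivate` is an assumption so that DFSr's own staging data is not counted.

```python
import heapq
import math
import os


def file_sizes(root):
    """Yield the size in bytes of every file under root, skipping DfsrPrivate
    so DFSr's own staging and conflict data are not counted."""
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune DfsrPrivate in place so os.walk does not descend into it.
        dirnames[:] = [d for d in dirnames if d.lower() != "dfsrprivate"]
        for name in filenames:
            try:
                yield os.path.getsize(os.path.join(dirpath, name))
            except OSError:
                continue  # file removed or unreadable while walking


def sum_of_largest(sizes, n_largest):
    """Combined size of the n largest values in sizes."""
    return sum(heapq.nlargest(n_largest, sizes))


def staging_quota_mb(sizes, n_largest):
    """Minimum staging quota in MB (rounded up), based on the n largest files."""
    return math.ceil(sum_of_largest(sizes, n_largest) / (1024 * 1024))


if __name__ == "__main__":
    # Hypothetical sizes (bytes) standing in for file_sizes(r"D:\Shares\Finance").
    sizes = [900 * 1024**2, 300 * 1024**2, 200 * 1024**2, 5 * 1024**2]
    print(staging_quota_mb(sizes, n_largest=2), "MB")
```

For the "as large as the folder" rule used during the initial copy, `math.ceil(sum(file_sizes(root)) / 1024**2)` gives the whole folder size in MB instead.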

Check the DFSr event log for errors

- Event 4208 followed by 4206, which means that it was not possible to stage a file due to lack of space and no further clean-up was possible.

- Frequent clean-up events (4202 followed by 4204). If more than a few such event pairs occur every hour, increase the staging quota by 50%.
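Tallying these event pairs by hour and applying the 50% rule can be sketched as follows. This is a minimal sketch, assuming the events for a single replicated folder have already been exported from the DFS Replication log as (timestamp, event ID) tuples in time order; "more than a few" is modelled by a hypothetical `max_pairs_per_hour=3` threshold, and the function names are illustrative.

```python
import math
from collections import Counter
from datetime import datetime

STAGING_CLEANUP = (4202, 4204)  # frequent clean-up pair
STAGING_FAILED = (4208, 4206)   # could not stage, no further clean-up possible


def pairs_per_hour(events, pair):
    """Count pair[0] followed by pair[1], bucketed by the hour pair[0] was logged.

    events: (datetime, event_id) tuples for ONE replicated folder, oldest first.
    """
    first_id, second_id = pair
    counts = Counter()
    pending = None  # time of the last unmatched first_id event
    for when, event_id in events:
        if event_id == first_id:
            pending = when
        elif event_id == second_id and pending is not None:
            counts[pending.replace(minute=0, second=0, microsecond=0)] += 1
            pending = None
    return counts


def recommended_quota_mb(current_mb, events, max_pairs_per_hour=3):
    """Grow the quota by 50% if either pair occurs more than
    max_pairs_per_hour times in any hour; otherwise keep it."""
    for pair in (STAGING_CLEANUP, STAGING_FAILED):
        if any(n > max_pairs_per_hour for n in pairs_per_hour(events, pair).values()):
            return math.ceil(current_mb * 1.5)
    return current_mb


if __name__ == "__main__":
    # Hypothetical log excerpt: four clean-up pairs within one hour.
    log = [(datetime(2024, 1, 1, 9, m + d), eid)
           for m in (0, 10, 20, 30) for d, eid in ((0, 4202), (1, 4204))]
    print(recommended_quota_mb(4096, log))
```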

Set up Monitoring for DFSr Replication

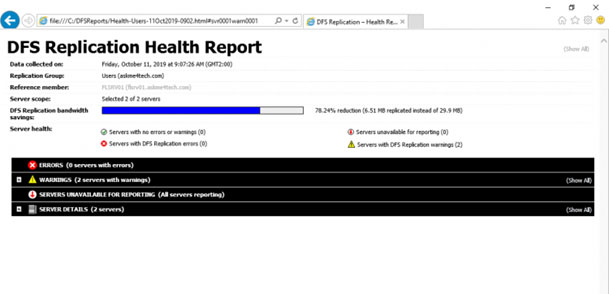

How to Check Replication Status with DFS Management Health Reports

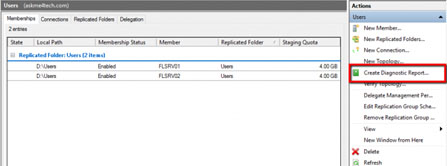

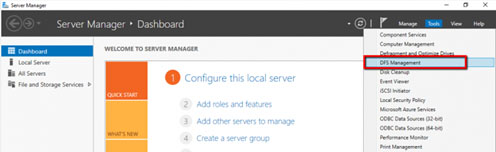

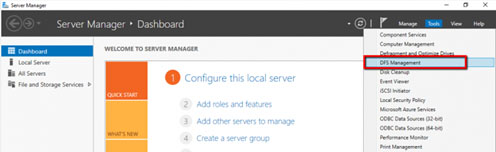

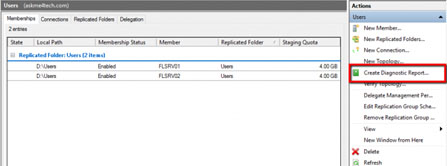

- In Server Manager, click Tools > DFS Management

- On the right-hand side, click Create Diagnostic Report

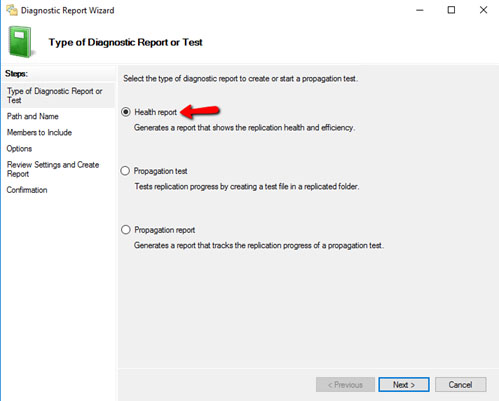

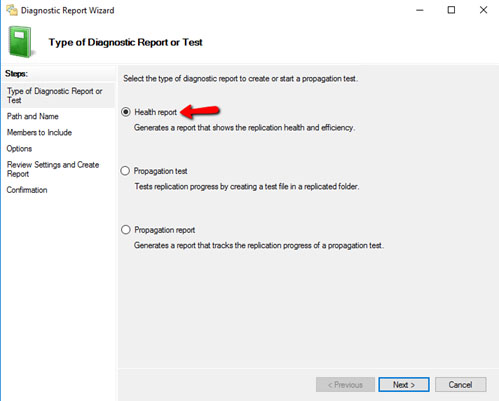

- Select Health Report. Click Next

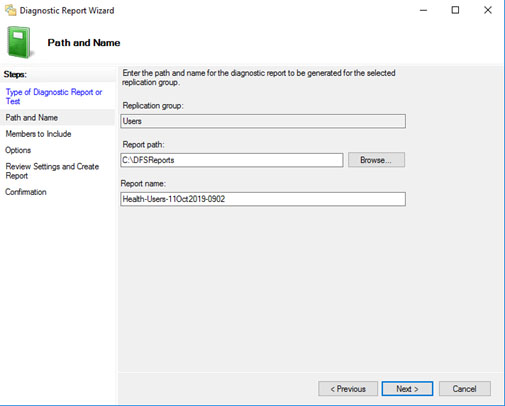

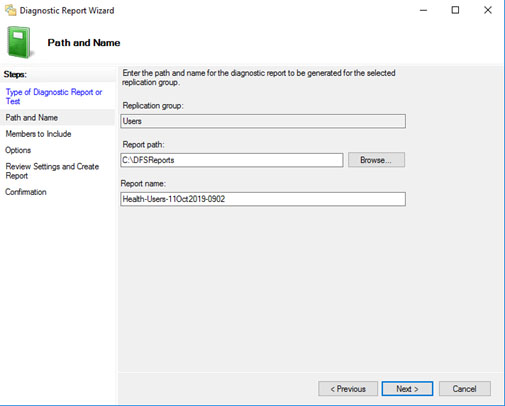

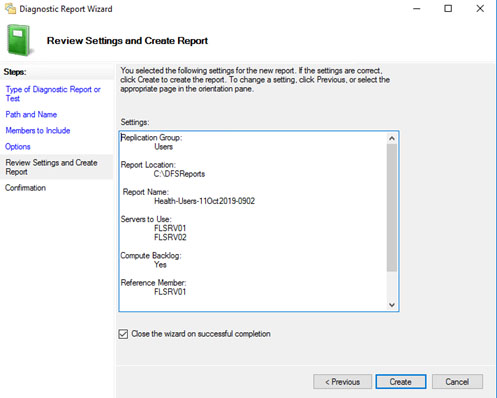

- Select where you want to save the report. Click Next

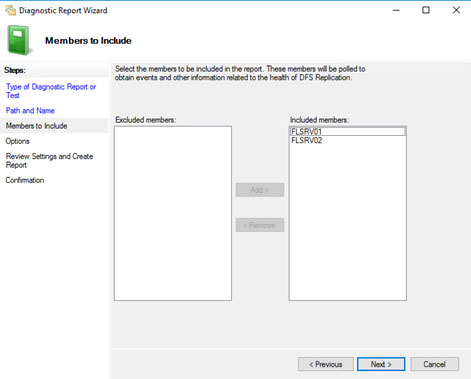

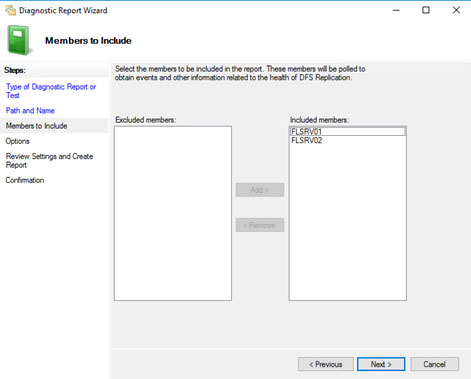

- Include all the members. Click Next

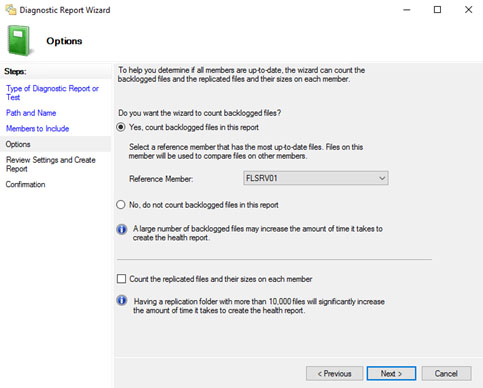

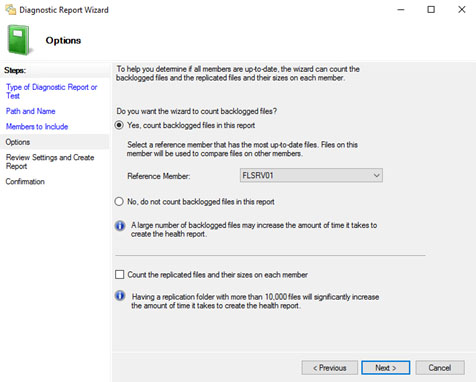

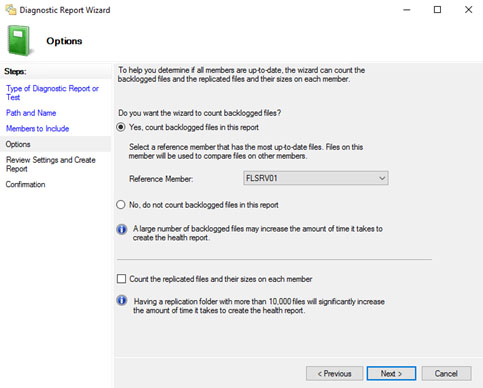

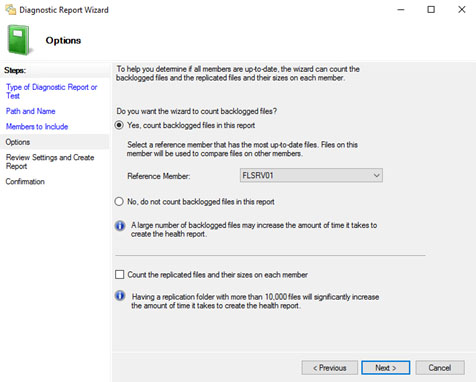

- Make sure you select Yes, count backlogged files in this report.

- Select the reference member: the server that has the latest version of the files. Which server that is depends on your environment. Click Next

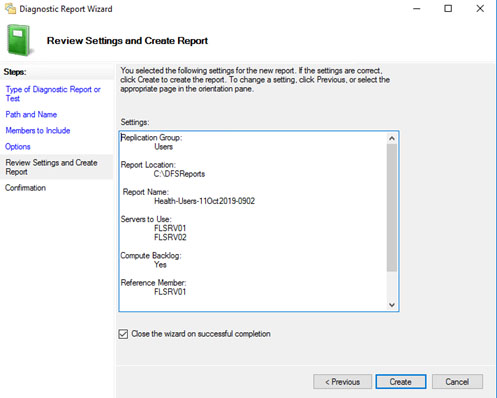

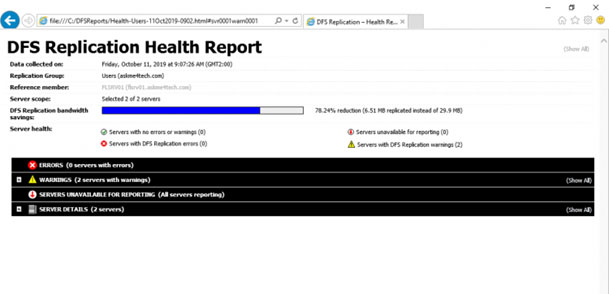

- Wait a few seconds while the HTML report is created; it shows what is happening on your file servers.

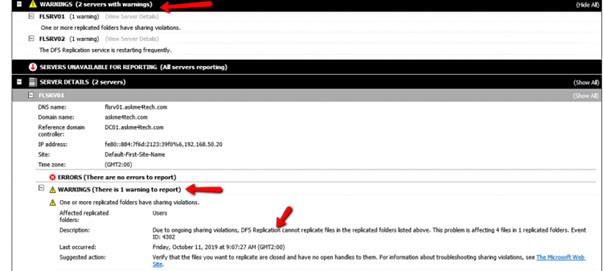

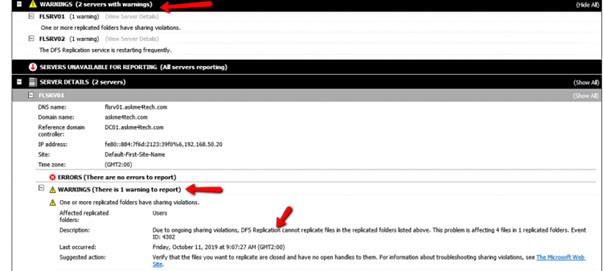

- Expand the WARNINGS or ERRORS sections to see what is going on.

- Health reports are good for an overall status, but they do not give in-depth detail. Once you have the status, you need other tools to see exactly what is going on.

Things that could impede DFSr:

1.) Locked Files

Files locked by other processes stop DFSr from copying them across. Freeing up these files, by closing the sessions that hold them and/or letting replication run overnight when the files are not in use, allows them to be copied across.

2.) Staging Quota

The staging folder is a cache that DFSr uses to prepare files for replication. It is often worth sizing its quota based on the largest files in the tree (see Staging above).

3.) Stale Data

Because DFSr originated in synchronising SYSVOL files, it limits how long a member can be out of replication before its data is treated as stale. By default this limit (the MaxOfflineTimeInDays setting) is 60 days.

The current setting can be retrieved by:

wmic.exe /namespace:\\root\microsoftdfs path DfsrMachineConfig get MaxOfflineTimeInDays

To raise the stale data limit to 365 days, run:

wmic.exe /namespace:\\root\microsoftdfs path DfsrMachineConfig set MaxOfflineTimeInDays=365

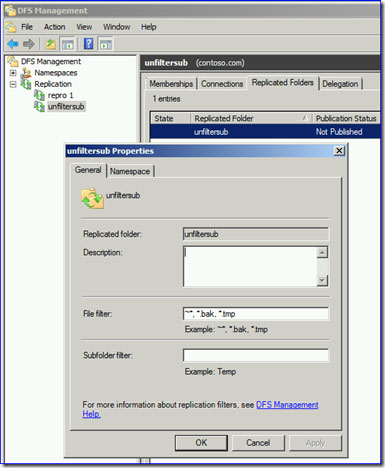

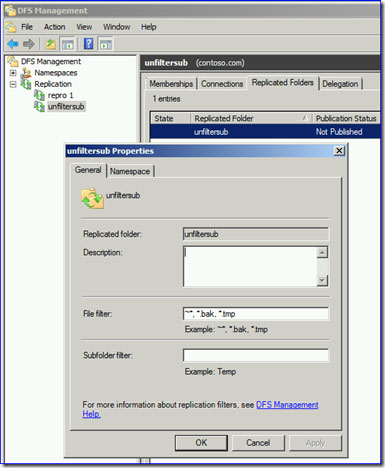
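Checking the stale data limit can be sketched in Python: read MaxOfflineTimeInDays from the wmic output and compare it with how long a member has been out of replication. This assumes wmic prints the property name on one line and its value on the next, and that the last successful replication time comes from your own monitoring; `parse_wmic_value` and `exceeds_max_offline` are hypothetical helpers, and the strict "longer than" comparison is an approximation.

```python
from datetime import datetime, timedelta

DEFAULT_MAX_OFFLINE_DAYS = 60  # DFSr default for MaxOfflineTimeInDays


def parse_wmic_value(output, property_name):
    """Read an integer property from `wmic ... get <property>` output.

    Assumes the property name is on one line and its value on the next
    non-blank line.
    """
    lines = [line.strip() for line in output.splitlines() if line.strip()]
    header = lines.index(property_name)  # ValueError if the header is missing
    return int(lines[header + 1])


def exceeds_max_offline(last_replicated, now, max_offline_days=DEFAULT_MAX_OFFLINE_DAYS):
    """True if a member has gone longer than MaxOfflineTimeInDays without replicating."""
    return now - last_replicated > timedelta(days=max_offline_days)


if __name__ == "__main__":
    sample = "MaxOfflineTimeInDays  \r\r\n60  \r\r\n\r\r\n"  # hypothetical captured output
    limit = parse_wmic_value(sample, "MaxOfflineTimeInDays")
    print(exceeds_max_offline(datetime(2024, 1, 1), datetime(2024, 3, 15), limit))
```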

4.) Filters in DFSr

By default, the DFSr file filter excludes ~*, *.bak and *.tmp files. I recommend removing this filter.
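To see what the default filter would keep out of replication before you remove it, the matching can be approximated with Python's `fnmatch`. This is a sketch only: it assumes the filter is matched against file names (not paths) and case-insensitively, as Windows file names are; `is_filtered`, `excluded_files` and the sample file names are hypothetical.

```python
from fnmatch import fnmatchcase

DEFAULT_FILE_FILTER = ("~*", "*.bak", "*.tmp")  # DFSr's default file filter


def is_filtered(filename, patterns=DEFAULT_FILE_FILTER):
    """True if the file name matches any filter pattern.

    Both sides are lower-cased to approximate Windows' case-insensitive names.
    """
    name = filename.lower()
    return any(fnmatchcase(name, pattern.lower()) for pattern in patterns)


def excluded_files(filenames, patterns=DEFAULT_FILE_FILTER):
    """Names that the given filter would keep out of replication."""
    return [f for f in filenames if is_filtered(f, patterns)]


if __name__ == "__main__":
    names = ["~$Budget.xlsx", "archive.BAK", "upload.tmp", "Report.pdf"]
    print(excluded_files(names))               # with the default filter
    print(excluded_files(names, patterns=()))  # filter removed
```

Note that `~*` also catches Office owner files such as `~$Budget.xlsx`, which is worth bearing in mind when deciding to remove it.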

5.) File Attributes

Files with certain attributes set, such as 'Temporary', are skipped by DFSr. Clearing the attribute on those files allows them to be copied across.

Checking the backlog shows which files are still waiting to be copied across.

Run fsutil to see the attributes of a given file:

fsutil usn readdata myfile.pdf

https://social.technet.microsoft.com/Forums/windowsserver/en-US/ab657345-5a8b-479d-81fb-0bd3a59dfe6d/dfsa-single-file-wont-replicate?forum=winserverfiles
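The Temporary attribute is a single bit (0x100) in a file's attribute mask, so checking and clearing it is simple bit arithmetic. The sketch below assumes the fsutil output contains a line of the form `File Attributes : 0x...`; the helper names and the sample output are hypothetical, and the code only computes the new mask. The actual change on disk still has to be made with Windows tooling, such as the approach in the linked thread.

```python
import re

FILE_ATTRIBUTE_TEMPORARY = 0x100  # files with this bit set are skipped by DFSr


def parse_file_attributes(fsutil_output):
    """Extract the 'File Attributes' value from `fsutil usn readdata` output.

    Assumes a line such as 'File Attributes  : 0x120'.
    """
    match = re.search(r"File Attributes\s*:\s*(0x[0-9a-fA-F]+)", fsutil_output)
    if match is None:
        raise ValueError("no 'File Attributes' line found")
    return int(match.group(1), 16)


def has_temporary(attributes):
    """True if the Temporary bit is set."""
    return bool(attributes & FILE_ATTRIBUTE_TEMPORARY)


def without_temporary(attributes):
    """Attribute mask with only the Temporary bit cleared."""
    return attributes & ~FILE_ATTRIBUTE_TEMPORARY


if __name__ == "__main__":
    sample = "FileName         : myfile.pdf\nFile Attributes  : 0x120\n"  # hypothetical output
    attrs = parse_file_attributes(sample)
    print(hex(attrs), has_temporary(attrs), hex(without_temporary(attrs)))
```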

6.) AV interference, firewall interference and other external factors

Note: the backlog can be checked with the following command:

dfsrdiag backlog /rgname:<REPLICATION GROUP> /rfname:<FOLDERNAME> /smem:<SENDING MEMBER> /rmem:<RECEIVING MEMBER>

This can be run from a PowerShell prompt or the PowerShell ISE on any domain-joined machine that can reach the servers in question and has the DFS Management tools (which include dfsrdiag) installed.
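If you want to script backlog checks, a minimal sketch is to build the dfsrdiag argument list and pull the count out of its output. It assumes dfsrdiag reports either `Backlog File Count: <n>` or a `No Backlog` line when the members are in sync; the helper names and the example group, folder and server names are hypothetical.

```python
import re


def backlog_command(rg_name, rf_name, sending_member, receiving_member):
    """Build the dfsrdiag backlog argument list (e.g. for subprocess.run)."""
    return [
        "dfsrdiag", "backlog",
        f"/rgname:{rg_name}",
        f"/rfname:{rf_name}",
        f"/smem:{sending_member}",
        f"/rmem:{receiving_member}",
    ]


def parse_backlog_count(output):
    """Return the backlog file count from dfsrdiag output.

    Assumes either a 'Backlog File Count: <n>' line or a 'No Backlog'
    line when the members are in sync.
    """
    if "No Backlog" in output:
        return 0
    match = re.search(r"Backlog File Count:\s*(\d+)", output)
    if match is None:
        raise ValueError("backlog count not found in dfsrdiag output")
    return int(match.group(1))


if __name__ == "__main__":
    # Hypothetical group, folder and server names.
    print(" ".join(backlog_command("RG-Shares", "Finance", "FS01", "FS02")))
    print(parse_backlog_count("Member <FS02> Backlog File Count: 25\n"))
```

On a machine with the DFS Management tools installed, you could pass `backlog_command(...)` to `subprocess.run(..., capture_output=True, text=True)` and feed its `stdout` to `parse_backlog_count`.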

DFS Flush: a set of DFSUtil commands that flush the local referral, domain and DFS/MUP cached information (rename the file with a '.bat' extension).

Stopping the DFS Namespace (DFS) and DFS Replication (DFSR) services, deleting the cached information within 'DfsrPrivate' and then restarting the services (or the server) can kick-start replication.

Afterwards, monitor memory and CPU usage in Task Manager: on the Processes tab for 'Distributed File System Replication', and on the Details tab for dfsrs.exe and dfssvc.exe.

Finally, sometimes removing the servers and redeploying works wonders!